Building Efficient Software Test Reports

One of the essential jobs of a test team is to provide detailed reporting on the testing assignments it completes for a project. The test team has specific goals and deliverables, and thorough, detailed software test reports are the evidence of the state of the work and its outcomes. Test reports are typically compiled and delivered by the project’s test lead or manager to core project stakeholders on a regular basis.

A software test report is a document that summarizes all test activities and test results. It should assess how well the testing was performed and identify where needs are still unmet. Based on the test report, stakeholders evaluate the quality of the tested product and decide on the next steps of software delivery.

What goes into a test report?

Software test reports should contain the details that stakeholders look for in regular updates. Keep in mind that all the information you include should support decisions about the project's next steps. Typical areas include:

Objective

Describe what the testing project covers and which deliverables to expect. Include the project or product name, the version, and a description of the project.

Example:

| Project | Project Razor |
| --- | --- |
| Description | Project Razor is a cryptocurrency application made for Android devices. |
| Deliverables | Conduct testing of all new features in v1.001 of the Razor deployment; execute test cases according to the test plan; document defects found at testing milestones, organized by priority and severity; deliver a test summary report and evaluation |
| Schedule | Oct 2021 – Jan 2022 |

Test Environment

Describe the environment in which the application or device is tested, listing the variables, scenarios, and timeline of testing. This could include how the test lab is set up, how many test cases are executed, and which networking or environmental variables are needed to conduct the testing.

Example:

- Running an Android emulator on 100 mobile devices connected serially

- Performing automated regression tests under a CI environment

- Network testing under a 5G LTE provider

Critical Issues / Blockers

Critical issues and blockers should be identified in their own section to draw the audience's attention to any situations from testing that require immediate attention. These are typically the most severe issues, which the test team wants to highlight up front while being prepared to explain why they are critical. They can also be summarized further later in the report, under the Test Summary and Defects sections.

Test Summary

This section should summarize the testing activity performed during the session. Use charts, graphs, and numbers as evidence to support your summary. It generally highlights metrics and data covering areas such as:

- Number of test cases executed

- Test passes and test failures

- Types of devices, platforms, and applications tested

- Additional comments
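As a minimal sketch of how these summary numbers might be computed, assuming each executed test case is recorded with the platform it ran on and a pass/fail outcome, the following Python tallies executed, passed, and failed cases, a pass rate, and per-platform counts. The `TestResult` record, `summarize` function, and sample data are hypothetical and for illustration only; real numbers would come from your test management tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class TestResult:
    # Hypothetical record of one executed test case
    case_id: str
    platform: str  # device, OS, or application the case ran against
    passed: bool


def summarize(results):
    """Return Test Summary counts: executed, passed, failed, pass rate, per-platform counts."""
    executed = len(results)
    passed = sum(1 for r in results if r.passed)
    failed = executed - passed
    # Guard against an empty run so the pass rate never divides by zero
    pass_rate = passed * 100 / executed if executed else 0.0
    return {
        "executed": executed,
        "passed": passed,
        "failed": failed,
        "pass_rate_pct": round(pass_rate, 1),
        "platforms": dict(Counter(r.platform for r in results)),
    }


if __name__ == "__main__":
    # Made-up results, for illustration only
    results = [
        TestResult("TC-001", "Android 12", True),
        TestResult("TC-002", "Android 12", False),
        TestResult("TC-003", "Android 11", True),
        TestResult("TC-004", "Android 11", True),
    ]
    print(summarize(results))
```

The per-platform counts map directly onto the "types of devices, platforms, and applications tested" item and can feed a chart in the report.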

Defects

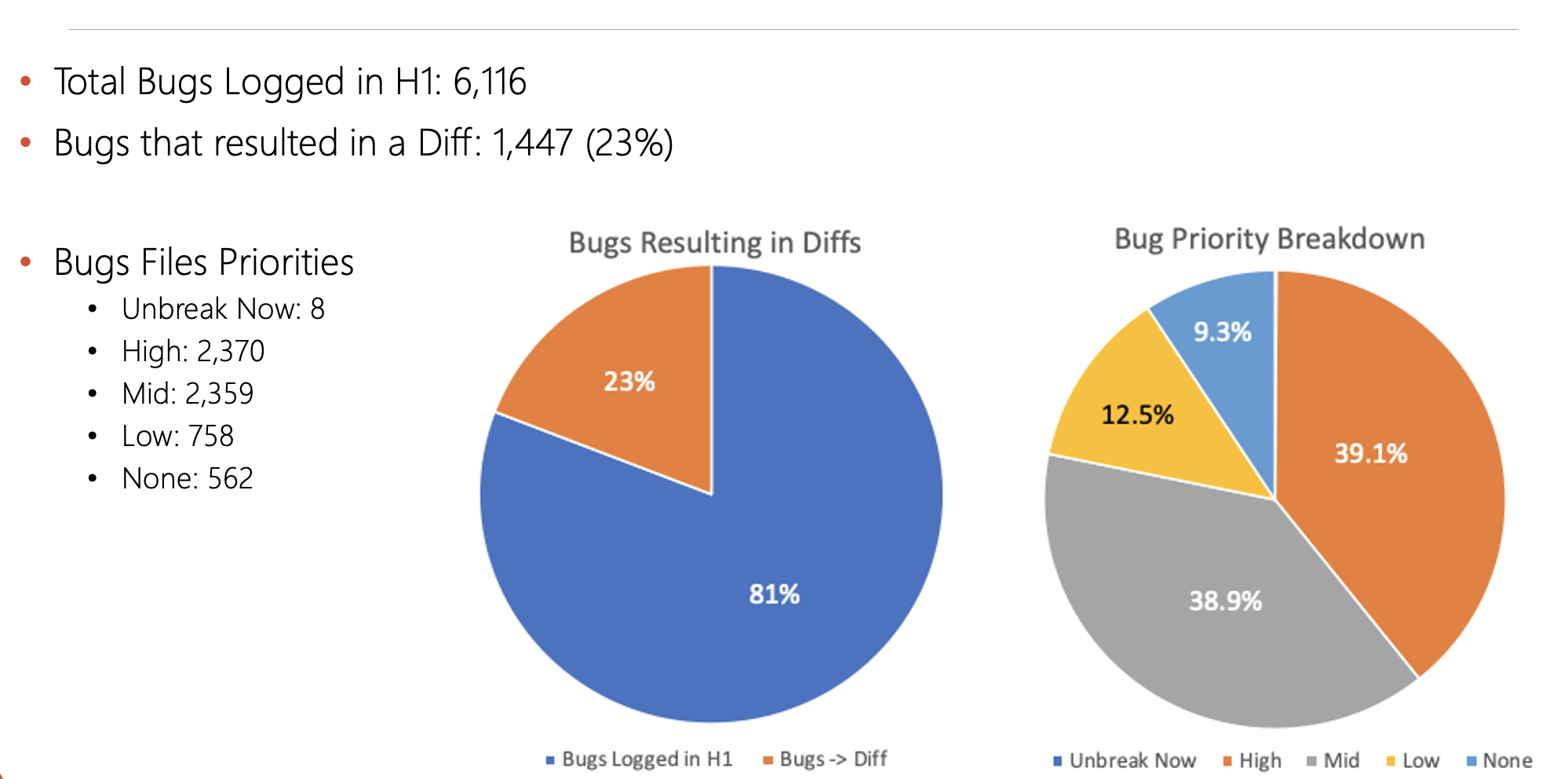

Defects help measure the health and progress of a project, and defect data should be reported as a critical metric in every software test report. However, defects can be measured in many ways, so first consider what information is warranted when presenting bug data to stakeholders. The most common defect metrics to report are:

- Number of defects found in the latest test cycle

- Breakdown of priority and severity of these defects

- Bug-to-fixed ratio
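A minimal sketch of these three metrics in Python, assuming defects are exported from a bug tracker with a priority, a severity, and a fixed flag. The `Defect` record, the function names, and the priority and severity scales are hypothetical. "Bug-to-fixed ratio" is interpreted here as defects found divided by defects fixed; some teams report the inverse, so adjust to match your own definition.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Defect:
    # Hypothetical defect record, e.g. one row exported from a bug tracker
    defect_id: str
    priority: str  # assumed scale, e.g. "P1" (highest) to "P4"
    severity: str  # assumed scale, e.g. "Critical", "Major", "Minor"
    fixed: bool


def defect_breakdown(defects):
    """Count defects found in a cycle, grouped by (priority, severity)."""
    return Counter((d.priority, d.severity) for d in defects)


def bug_to_fixed_ratio(defects):
    """Defects found divided by defects fixed; None if none are fixed yet."""
    fixed = sum(1 for d in defects if d.fixed)
    return len(defects) / fixed if fixed else None


if __name__ == "__main__":
    # Made-up defects for one test cycle, for illustration only
    cycle = [
        Defect("BUG-1", "P1", "Critical", fixed=True),
        Defect("BUG-2", "P2", "Major", fixed=False),
        Defect("BUG-3", "P2", "Major", fixed=True),
        Defect("BUG-4", "P3", "Minor", fixed=False),
    ]
    print(f"Defects found this cycle: {len(cycle)}")
    for (priority, severity), count in sorted(defect_breakdown(cycle).items()):
        print(f"  {priority} / {severity}: {count}")
    print(f"Bug-to-fixed ratio: {bug_to_fixed_ratio(cycle)}")
```

Returning `None` when nothing has been fixed keeps the report from showing a misleading ratio early in a cycle.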


Additional Areas to Include:

- Defect Removal Efficiency

- Escaped Defects percentage

- Test Case Efficiency

- Defect Resolution Time

- Time to test

- Team metrics, broken down by individuals or project groups

You can find definitions for many of these defect metrics in this post from Thinksys.

Best Practices for Writing Good Software Test Reports

- Always follow a standardized template and report consistently in each update. Stakeholders want summarized data that tells a consistent story and lets them quickly compare week-over-week metrics. A consistent format that everyone is familiar with speeds up decision-making and keeps expectations aligned with the plan.

- Software test reports should be clear, concise, and easy to understand. Supplement your data with graphics and charts, and back up your story with time-series data that shows the bigger picture of trends and movement.

- Describe the testing activity in enough detail to show what testing was performed, but keep it brief and to the point. Move supplementary information and additional data to an appendix or follow-up documentation.

- Keep in mind that a software test report should not be an essay about project activity. Instead, it should summarize the test results and focus on the main objective of the testing.

- Finally, when delivering software test reports, know your audience ahead of time and tailor your report to the right group. Your presentation should differ when communicating with an internal project team versus an executive audience. A project team will appreciate more details and specifics, whereas directors and VPs are more interested in a high-level summary and blocking issues. Be sure you can defend the data presented, and prepare for unexpected discussions that may come out of left field. Remember, software test reports exist to support the work the test team has performed, and it is in your audience's best interest to understand what they are seeing and to have a healthy discussion about whether the product is ready from a quality standpoint.
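To support the week-over-week comparisons and time-series trends recommended above, a report generator might compute the change in a metric from one week to the next. This is a minimal sketch, assuming weekly values are already collected in chronological order; the `week_over_week` function and the sample pass rates are hypothetical.

```python
def week_over_week(series):
    """Given [(week_label, value), ...] in chronological order, return
    [(week_label, value, change_from_previous_week), ...].

    The first week has no previous value, so its change is None.
    """
    rows = []
    previous = None
    for label, value in series:
        change = None if previous is None else value - previous
        rows.append((label, value, change))
        previous = value
    return rows


if __name__ == "__main__":
    # Made-up weekly pass rates (%), for illustration only
    weekly_pass_rate = [("W1", 72.0), ("W2", 78.5), ("W3", 81.0)]
    for label, value, change in week_over_week(weekly_pass_rate):
        delta = "n/a" if change is None else f"{change:+.1f} pts"
        print(f"{label}: {value:.1f}% ({delta})")
```

Reporting the change in percentage points alongside the raw value lets stakeholders see the trend at a glance without comparing separate reports.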